Trying OKD4: home lab needs to grow

I spent last week tinkering with OKD4, the open source base for OpenShift. OpenShift, in turn, is Red Hat's distribution of Kubernetes. This open source/commercial split is common among Red Hat products: OKD/OpenShift is like AWX/Ansible Tower, Spacewalk/Satellite, WildFly/JBoss, oVirt/RHEV, etc.

I already had the previous version deployed: 3.11, back then called Origin rather than OKD. My cluster consists of two old ThinkPads and a virtual machine, and I was planning to redeploy OKD4 on them. But first, a PoC on virtual machines.

So I started with a minimal viable cluster: 3 schedulable master nodes. (There's also a CodeReady Containers version, a single-node cluster, but it isn't upgradable.) The requirements table looks scary (4 CPU cores and 16GiB of RAM per master), but that's probably overkill, right?

As it turned out, I won't be able to reuse my legacy hardware to host the new cluster. Oh boy, OKD4 is massive.

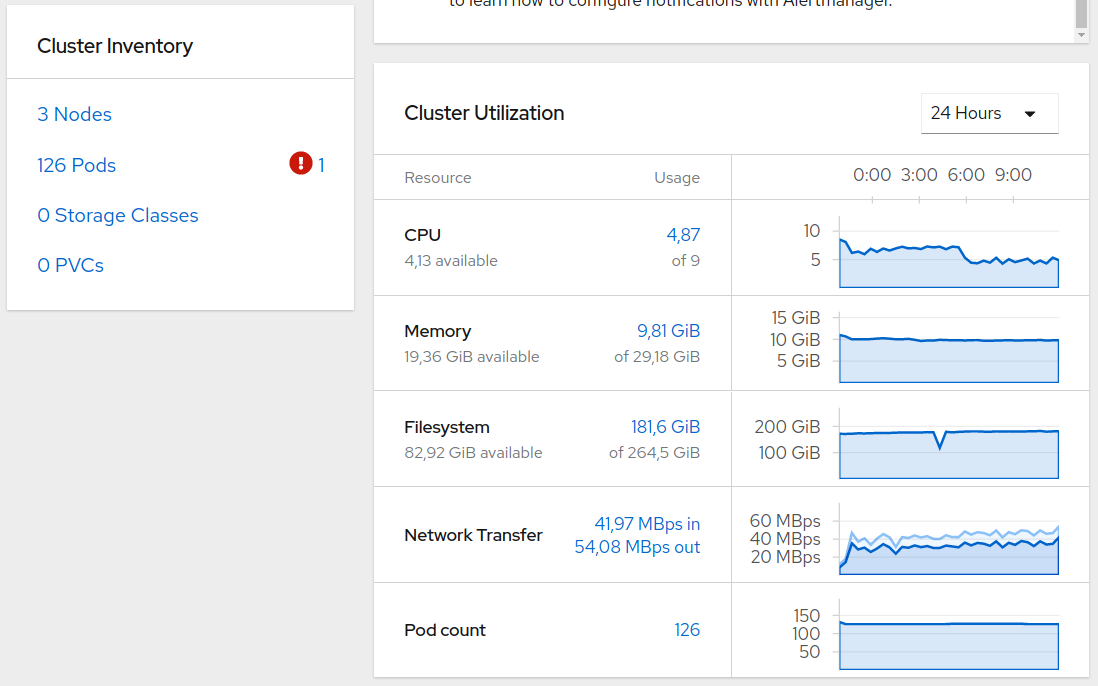

That's the cluster dashboard just after installation. For all practical purposes it's an empty platform; I haven't deployed any of my stuff yet. And still almost 5 CPU cores and 10 gigs of memory are in use. Huh.

My VM setup is below the minimum requirements: each VM has 10GiB of RAM and 3 CPU cores. I'm used to thinking that you can't skimp on memory, but you can assign fewer CPU cores; at worst, everything will be slower.
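
A quick back-of-the-envelope comparison makes the gap concrete. This sketch only uses the numbers above (3 masters, the documented minimum of 4 cores and 16GiB each, my VMs with 3 cores and 10GiB each) and deliberately ignores whatever the host itself needs.

```python
# Back-of-the-envelope sizing: documented per-master minimums vs. my PoC VMs.
# Host/hypervisor overhead is deliberately ignored.
MASTERS = 3
MIN_CORES, MIN_RAM_GIB = 4, 16   # per master, from the requirements table
VM_CORES, VM_RAM_GIB = 3, 10     # per master VM in my setup


def totals(per_node_cores: int, per_node_ram_gib: int, nodes: int = MASTERS) -> tuple[int, int]:
    """Return (total cores, total RAM in GiB) for a cluster of identical nodes."""
    return per_node_cores * nodes, per_node_ram_gib * nodes


need_cores, need_ram = totals(MIN_CORES, MIN_RAM_GIB)
have_cores, have_ram = totals(VM_CORES, VM_RAM_GIB)

print(f"required: {need_cores} cores, {need_ram} GiB")
print(f"my VMs:   {have_cores} cores, {have_ram} GiB")
print(f"short by: {need_cores - have_cores} cores, {need_ram - have_ram} GiB")
```

Even before any workload, the three masters alone call for 12 cores and 48GiB, while my VMs add up to 9 cores and 30GiB.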

That won't fly with OKD. Components declare their CPU needs in `requests:` sections of their resource specs. If the nodes can't deliver, the pods can't be scheduled and will not run.
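
To see why undersized nodes fail outright instead of just running slowly, here is a deliberately simplified model of the scheduler's CPU fit check: a pod fits on a node only if the node's allocatable CPU (capacity minus what's reserved for the system), minus the requests of pods already there, covers the new pod's request. The real kube-scheduler checks much more (memory, taints, affinity and so on), and the node and pod values below are made up for illustration.

```python
from decimal import Decimal


def cpu_to_millicores(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity such as "250m", "1" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def fits(node_allocatable: str, existing_requests: list[str], new_request: str) -> bool:
    """Simplified CPU-only fit check: usage is irrelevant, only requests count."""
    already_requested = sum(cpu_to_millicores(r) for r in existing_requests)
    free = cpu_to_millicores(node_allocatable) - already_requested
    return cpu_to_millicores(new_request) <= free


# Hypothetical 3-core VM after system reservations, with some pods already placed.
print(fits("2500m", ["1", "600m", "500m"], "500m"))  # False: only 400m left
print(fits("2500m", ["1", "600m", "500m"], "300m"))  # True
```

The point is that the check compares declared requests, not actual load, so a node can look idle and still refuse new pods.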

So my home lab would need to grow by 64GiB of RAM and a 16-thread CPU, just for OKD. Speaking of CPU…

I'm seriously taken aback by the CPU usage of this empty cluster. I don't know exactly what's using so much. There's Prometheus scraping metrics, one CronJob and a handful of health checks; that's all. Everything should be event-driven and sit idle when there's no workload. Right now OKD wastes a tremendous amount of power doing nothing.

Enough complaining. I like how OKD is managed and configured.

OKD owns the master nodes, including the operating system (Fedora CoreOS). Everything is managed from within the cluster and configured like normal Kubernetes resources, via YAML. LDAP connectivity, a custom logo, the API server's TLS certificates… create a ConfigMap, update a field in a resource definition, and it just happens.
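
As an example of the "create a ConfigMap, update a field" workflow, this sketch builds the two objects the OKD 4.x docs describe for a custom console logo: a ConfigMap holding the image in the `openshift-config` namespace, and the `customization.customLogoFile` field on the cluster-scoped `Console` resource named `cluster` (`operator.openshift.io/v1`). The ConfigMap name, file name and product name are placeholders I picked, and the console customization API has changed across releases, so check the docs for your version. I generate JSON here since `oc` accepts it just like YAML.

```python
import base64
import json


def logo_manifests(filename: str, logo: bytes,
                   configmap_name: str = "console-custom-logo",
                   product_name: str = "Home Lab") -> dict:
    """Return a Kubernetes List holding the logo ConfigMap and the Console customization."""
    configmap = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        # The logo ConfigMap has to live in the openshift-config namespace
        "metadata": {"name": configmap_name, "namespace": "openshift-config"},
        # binaryData values must be base64-encoded
        "binaryData": {filename: base64.b64encode(logo).decode("ascii")},
    }
    console = {
        "apiVersion": "operator.openshift.io/v1",
        "kind": "Console",
        "metadata": {"name": "cluster"},  # the single, cluster-scoped Console config
        "spec": {
            "customization": {
                # Points at the ConfigMap above: its name plus the key of the file in it
                "customLogoFile": {"name": configmap_name, "key": filename},
                "customProductName": product_name,
            }
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [configmap, console]}


# Placeholder SVG; in practice read the real file, e.g. Path("logo.svg").read_bytes()
svg = b'<svg xmlns="http://www.w3.org/2000/svg"/>'
print(json.dumps(logo_manifests("logo.svg", svg), indent=2))
```

Piping the output into `oc apply -f -` is all it takes; the console operator should notice the change and roll out the customized console.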

I much prefer eventual consistency, asynchronously achieved by Kubernetes operators, to running openshift-ansible. The latter touches everything and tends to break when something isn't 100% right, which is cumbersome when each run takes 40+ minutes.
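
The difference in approach can be shown with a toy reconcile loop: compare desired state with observed state, act only on what differs, and leave anything that failed for the next pass instead of starting a whole run over. This is only an illustrative model (real controllers react to watch events and requeue with backoff), and the `flaky_apply` step and the config keys are hypothetical.

```python
from typing import Callable

Apply = Callable[[str, str], bool]


def reconcile(desired: dict[str, str], actual: dict[str, str], apply: Apply) -> list[str]:
    """One pass: fix only the keys that drifted; return keys still out of sync."""
    pending = []
    for key, value in desired.items():
        if actual.get(key) == value:
            continue  # already converged, leave it alone
        if apply(key, value):
            actual[key] = value
        else:
            pending.append(key)  # no drama, retry on the next pass
    return pending


def run_until_converged(desired: dict[str, str], actual: dict[str, str],
                        apply: Apply, max_passes: int = 10) -> int:
    """Repeat reconcile passes until nothing is pending; return the passes used."""
    for n in range(1, max_passes + 1):
        if not reconcile(desired, actual, apply):
            return n
    raise RuntimeError("state did not converge")


attempts: dict[str, int] = {}


def flaky_apply(key: str, value: str) -> bool:
    """Hypothetical apply step: the LDAP change fails on its first attempt."""
    attempts[key] = attempts.get(key, 0) + 1
    return not (key == "ldap" and attempts[key] == 1)


desired = {"logo": "custom.svg", "ldap": "ldaps://ldap.example.com", "tls": "named-cert"}
actual = {"logo": "custom.svg"}
print(run_until_converged(desired, actual, flaky_apply))  # 2
print(actual == desired)  # True
```

The `logo` key is never touched because it already matched, and the failed LDAP step costs one extra pass rather than a full rerun.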

With OKD you get clean bootstrapping (the Ignition config is served by a temporary bootstrap Kubernetes node; how cool is that?) and end up with a basic working cluster, which you then customize using Kubernetes YAMLs.
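
Roughly how that works: the `master.ign` written by `openshift-install create ignition-configs` is only a small "pointer" config. On first boot, Ignition follows it to fetch and merge the real config from the Machine Config Server on port 22623 of `api-int.<cluster>.<domain>`, which the bootstrap node serves until the control plane takes over. The sketch below builds such a pointer config; the cluster and domain names are hypothetical, the CA bytes are a placeholder, and the spec version (3.1.0 here) is an assumption that depends on the OKD release.

```python
import base64
import json


def pointer_ignition(cluster: str, base_domain: str, role: str, ca_pem: bytes,
                     version: str = "3.1.0") -> dict:
    """Build an Ignition (spec 3.x) pointer config that merges the real one from the MCS."""
    ca_source = "data:text/plain;charset=utf-8;base64," + base64.b64encode(ca_pem).decode("ascii")
    return {
        "ignition": {
            "version": version,
            # The full machine config is served by the Machine Config Server on port 22623
            "config": {"merge": [{"source": f"https://api-int.{cluster}.{base_domain}:22623/config/{role}"}]},
            # Trust the cluster's root CA when fetching it over HTTPS
            "security": {"tls": {"certificateAuthorities": [{"source": ca_source}]}},
        }
    }


# Hypothetical cluster "lab" under example.com, with placeholder CA bytes.
print(json.dumps(pointer_ignition("lab", "example.com", "master", b"<placeholder CA PEM>"), indent=2))
```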

The documentation is also very nice and seems to cover every common (and some less common) case.

Tomasz Torcz
